The HIGHER project adheres to the Open Compute Project (OCP) Data Center Modular Hardware System (DC-MHS) specifications. DC-MHS standardizes the form factors, connector placements and power requirements of the essential building blocks of a server, making them interoperable and interchangeable. Figure 1 shows the conceptual high-level diagram from OCP:

Figure 1 OCP high-level diagram of the Data Center Modular Hardware System (DC-MHS)

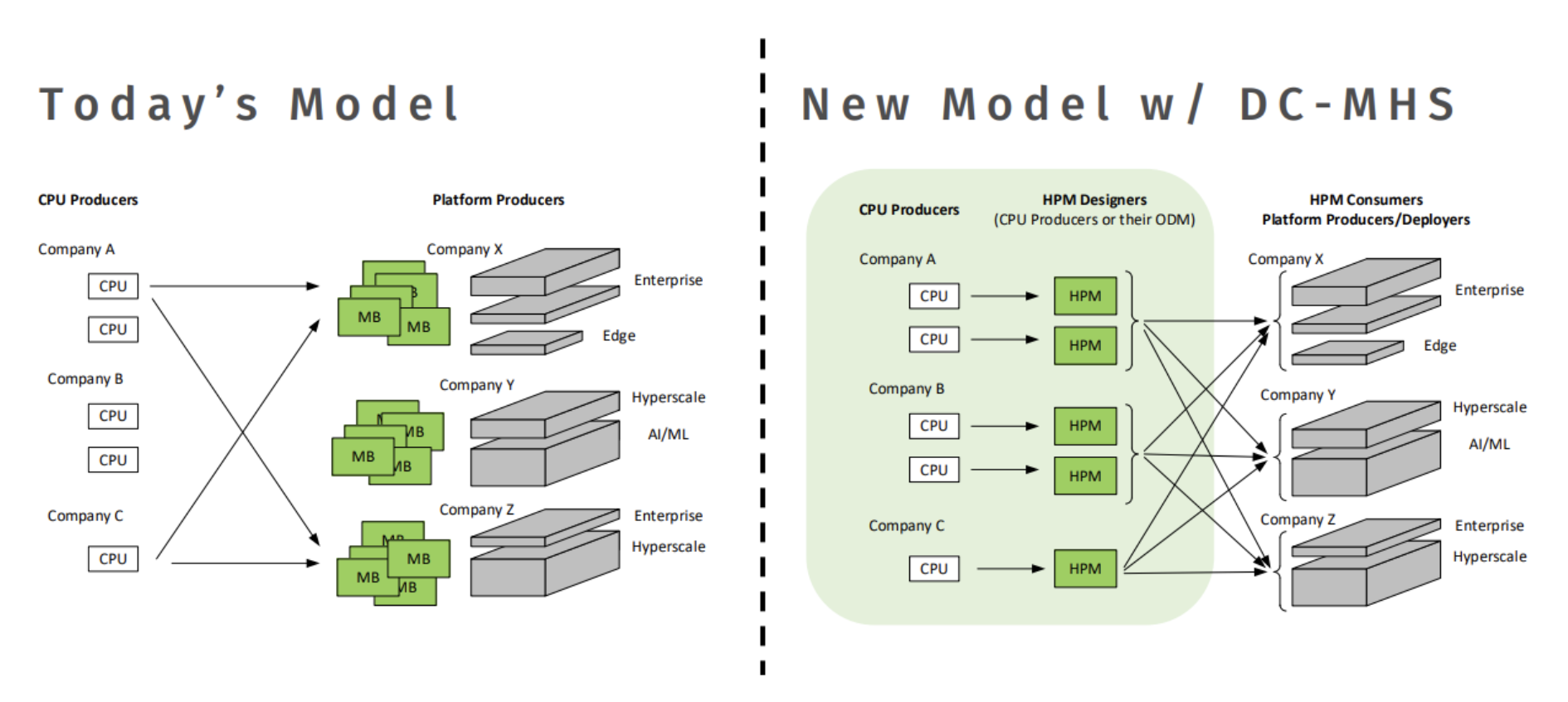

In this context, the HIGHER project partners are building a collection of DC-MHS servers by assembling Host Processor Modules (HPMs) and Secure Control Modules (DC-SCMs), which are also being developed within the HIGHER project.

SiPearl’s contribution focuses on the design of two types of HPMs, both built around the next generation of EPI processors, Rhea2. These processors use a dual-chiplet design in which the two chiplets are connected through Universal Chiplet Interconnect Express (UCIe) die-to-die links, providing more than 96 functional Arm Neoverse V3 cores.

Both SiPearl HPMs comply with the Scalable Density Optimized (SDNO) form factor, the latest one released by OCP DC-MHS and the successor to the earlier M-FLW form factor.

To cover as many use cases as possible, SiPearl will develop both a single-socket HPM (SDNO Class A335) and a dual-socket HPM (SDNO Class C335). The latter provides optimized chip-to-chip performance and is more targeted at HPC use cases.

These HPMs will be integrated into the chassis developed by 2CRSi and combined with (i) the other HPM developed by Exapsys within the HIGHER project, which integrates multiple instances of the EUPilot chip, and (ii) the custom DC-SCM developed by Exapsys and FORTH.

Figure 2 highlights how the SiPearl HPMs are integrated in the DC-MHS server to improve flexibility and maximize reusability:

Figure 2 SiPearl HPMs integrated in the DC-MHS server

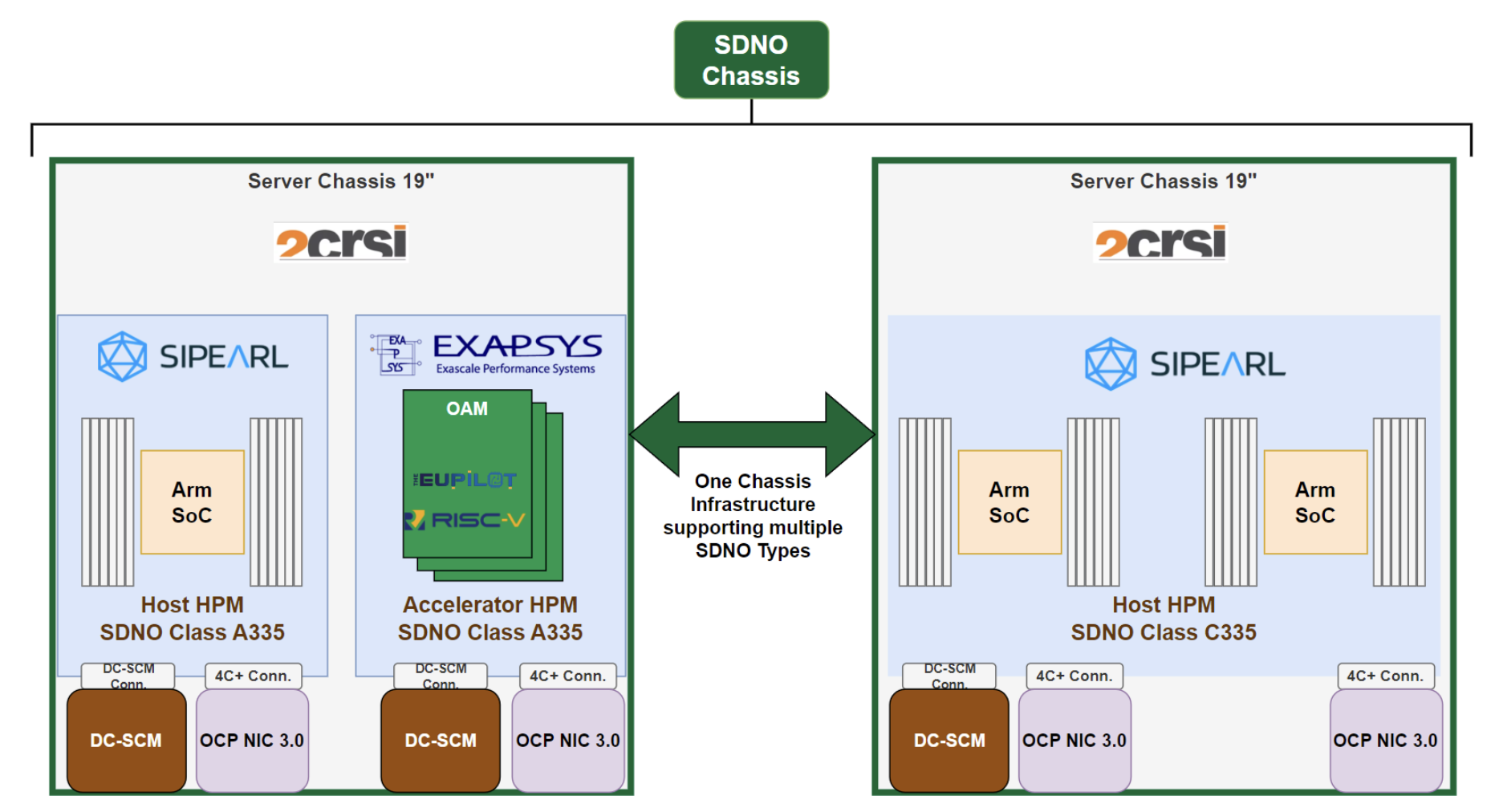

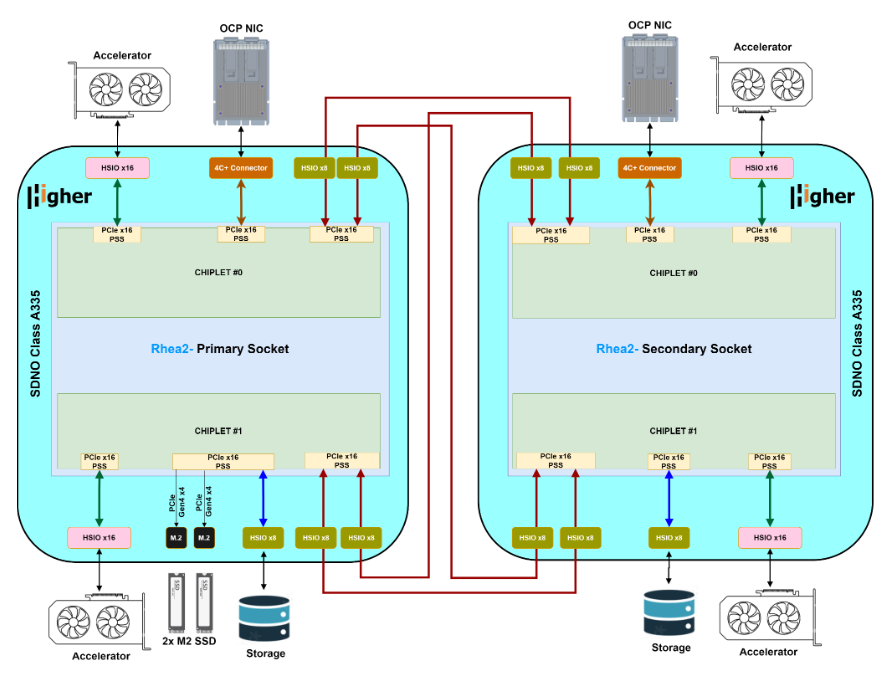

Figure 3 provides an overview of the SDNO Class C335 HPM in a typical configuration combined with accelerators, with an all-to-all interconnect between the four chiplets involved, optimized for HPC use cases:

Figure 3 SDNO Class C335 HPM in a typical configuration combined with accelerators, with an all-to-all interconnect between the four chiplets involved

A similar node organization can be achieved by placing two SDNO Class A335 HPMs back to back, as shown in Figure 4.

Figure 4 Two SDNO Class A335 HPMs, back to back

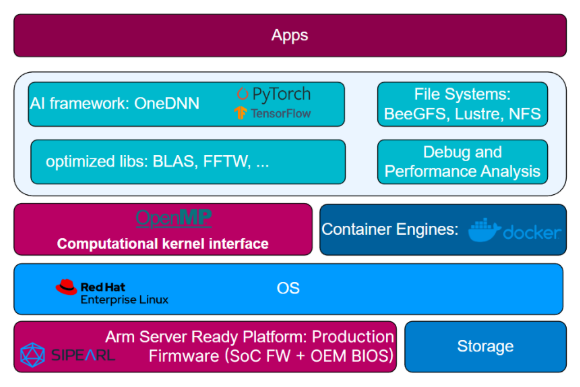

Besides the hardware modules, SiPearl will also provide a complete software stack that can be leveraged for HPC or AI applications (Figure 5).

Figure 5 SiPearl’s complete software stack for HPC or AI applications

The DC-MHS servers based on SiPearl’s HPMs and the associated software stack will be used in a variety of use cases addressed by SiPearl together with partners such as BSC and FORTH. These use cases include:

- Accelerated Data Processing: Demonstrates the advantages of Rhea2-based servers and their software stack for accelerating cloud workloads, combining Rhea2 (Arm) host CPUs with acceleration in the Arm Scalable Vector Extension (SVE) units, using a set of representative micro-kernels extracted from applications such as the scoring function for molecular docking, graph analytics, and ML inference

- IaaS: Demonstrates performance and efficiency parity between Rhea2-based servers and mainstream x86-based cloud server platforms by benchmarking scenarios where I/O operations are critical, such as storage-intensive applications

- PaaS: Demonstrates a complete development and deployment environment supporting large-scale data processing for HPC, ML inference and data analytics, including the use of multiple Rhea2 processors, possibly combined with the RISC-V accelerators integrated on the Exapsys HPM. To fully exploit these multi-device configurations, HIGHER will continue the work started in the RISER project and build on the hierarchical parallelization strategy developed there. In that context, SiPearl will offer an OpenMP infrastructure able to offload data and code to different devices

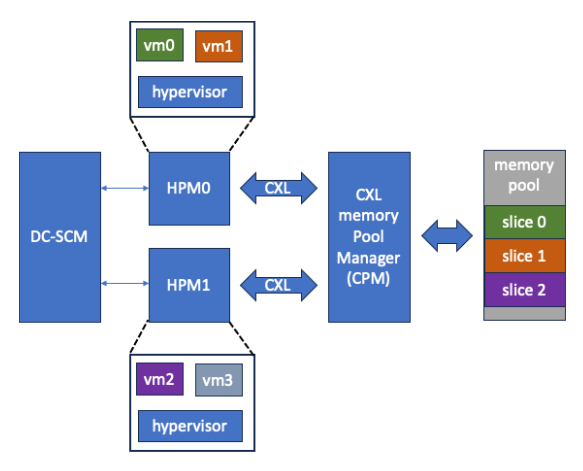

- Remote CXL-based disaggregated memory: The Rhea2-based HPMs will also be used to demonstrate advanced memory pooling over CXL links, offering dynamic per-VM memory allocation

Figure 6 CXL-based disaggregated memory
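The PaaS use case mentions an OpenMP infrastructure that offloads data and code to different devices. Purely as an illustration, and not as SiPearl's actual infrastructure, the C sketch below shows how standard OpenMP target directives (OpenMP 4.5 and later) can split one loop across every available offload device. The function name `scale_across_devices` is hypothetical; the sketch assumes a compiler with OpenMP offload support and falls back to the host cores when no device is present or when OpenMP is disabled.

```c
#include <assert.h>
#include <stddef.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper (illustration only): scale x[0..n) by a, splitting
 * the array into contiguous chunks and offloading one chunk to each
 * available OpenMP target device. Falls back to the host when no device
 * is present or when the code is compiled without OpenMP. */
void scale_across_devices(double *x, int n, double a)
{
    assert(x != NULL || n == 0);

    int ndev = 0;
#ifdef _OPENMP
    ndev = omp_get_num_devices();
#endif

    if (ndev <= 0) {
        /* No offload target: run the loop on the host cores. */
        #pragma omp parallel for
        for (int i = 0; i < n; i++)
            x[i] *= a;
        return;
    }

    int chunk = (n + ndev - 1) / ndev; /* ceiling division */
    for (int d = 0; d < ndev; d++) {
        int lo = d * chunk;
        int len = (lo + chunk <= n) ? chunk : n - lo;
        if (len <= 0)
            break;
        double *part = x + lo;
        /* Copy this chunk to device d, scale it there, and copy it back.
         * nowait turns each target region into a deferred task so the
         * chunks for different devices can run concurrently. */
        #pragma omp target teams distribute parallel for device(d) \
            map(tofrom: part[0:len]) nowait
        for (int i = 0; i < len; i++)
            part[i] *= a;
    }
    /* Wait for all deferred target tasks to complete. */
    #pragma omp taskwait
}
```

For example, calling `scale_across_devices(v, 5, 2.0)` on `{1, 2, 3, 4, 5}` yields `{2, 4, 6, 8, 10}` whether the chunks run on target devices or on the host. Block partitioning is used here only for simplicity; a hierarchical strategy such as the one referenced from RISER would typically balance work according to each device's capabilities.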

Anticipating the growing requirements for renewable security, which complements device ownership transfer and the circular economy, SiPearl will also explore advanced integration of the Rhea2 root of trust with Caliptra, the OCP Root of Trust for Measurement (RTM), hosted on the HIGHER DC-SCM.